Description

DeepABIS is a convolutional neural network trained to classify bee species from images of bee wings.

Installation

The system can be tried out by installing either the web version or the mobile version. The web version consists of two services that must both be installed: the inference service and the web server. For a quick installation of the web version, we provide ready-to-use, pre-built Docker images.

Web version

Using Docker

This is the recommended approach for a quick start.

Requirements:

- Docker >= 19
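If you are unsure whether your Docker installation meets this requirement, the following Python sketch parses the output of `docker --version`, which prints a line such as `Docker version 19.03.12, build 48a66213fe`, and compares the major version with 19. The helper names are hypothetical and not part of DeepABIS; the regular expression assumes that output format.

```python
import re
import subprocess

MIN_DOCKER_MAJOR = 19  # from the "Docker >= 19" requirement above


def docker_major_version(version_output: str) -> int:
    """Extract the major version from `docker --version` output,
    e.g. 'Docker version 19.03.12, build 48a66213fe' -> 19."""
    match = re.search(r"version\s+(\d+)\.", version_output)
    if match is None:
        raise ValueError("unrecognized docker version output: %r" % version_output)
    return int(match.group(1))


def docker_meets_requirement(version_output: str, minimum: int = MIN_DOCKER_MAJOR) -> bool:
    """True if the reported major version is at least `minimum`."""
    return docker_major_version(version_output) >= minimum


def installed_docker_version() -> str:
    """Run `docker --version` (requires the docker CLI on PATH) and return its output."""
    result = subprocess.run(["docker", "--version"], stdout=subprocess.PIPE,
                            universal_newlines=True, check=True)
    return result.stdout
```

On a machine with Docker installed, `docker_meets_requirement(installed_docker_version())` tells you whether you can proceed.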

Inference service

Main repository: deepabis-inference

The web version sends its classification requests to this service, which loads the network model, performs inference, and sends the results back to the PHP client. Install it first:

- Run `docker pull deepabis/deepabis:inference`
- Create a bridge network. Both containers need to be in the same network to communicate with each other. By creating a user-defined network, we can use aliases for each container instead of IP addresses: `docker network create deepabis-network`
- Run the container with the alias `inference` in our newly created network and expose port 9042: `docker run -p 9042:9042 --net deepabis-network --network-alias inference deepabis/deepabis:inference`

Now the second container, the web server (or a native installation of it), will be able to reach the inference service.
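Before starting the web server, you can check that the inference service accepts connections. The sketch below uses a hypothetical helper, `inference_reachable`; it only opens a TCP connection to port 9042 and does not speak the service's actual request protocol, which this README does not describe. From the host, use `localhost` (the port is published with `-p 9042:9042`); from another container on `deepabis-network`, use the alias `inference`.

```python
import socket


def inference_reachable(host: str = "localhost", port: int = 9042, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only shows that something is listening on the port; it does not
    send a classification request."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, unknown host, ...
        return False
```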

Web server

Main repository: deepabis-cloud

- For a quick start, the following command pulls the web server as a Docker image: `docker pull deepabis/deepabis-cloud`
- Then, start a simple web server by running `docker run -p 8000:8000 --net deepabis-network -e "INFERENCE_HOST=inference" deepabis/deepabis-cloud`

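This again creates a container on our custom network. The `INFERENCE_HOST=inference` environment variable tells the web server to reach the inference service through its network alias.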

The website can then be viewed at http://localhost:8000.
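To confirm from a script that the web server is up, you can request its start page. This is a minimal sketch with a hypothetical helper name; it only checks for an HTTP 200 response and does not exercise classification.

```python
from urllib.request import urlopen


def web_ui_up(url: str = "http://localhost:8000", timeout: float = 5.0) -> bool:
    """Return True if the server answers `url` with HTTP status 200."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.getcode() == 200
    except OSError:  # URLError and HTTPError are both OSError subclasses
        return False
```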

Manually

Inference service

Main repository: deepabis-inference

- Requirements:
  - Python >= 3.5.2
- Clone the inference repository: `git clone https://github.com/deepabis/deepabis-inference && cd deepabis-inference`
- Install the dependencies: `pip install -r requirements.txt`
- Run the server: `python server.py`
- The inference service now runs on port 9042.

Web server

Main repository: deepabis-cloud

- Requirements:
  - PHP >= 7.3
  - Composer >= 1.7
  - Node >= 11.0
  - NPM >= 6.0
- Clone the web repository: `git clone https://github.com/deepabis/deepabis-cloud && cd deepabis-cloud`
- Install PHP dependencies: `composer install`
- Copy `.env.example` to `.env`.
- In the `.env` file, set `INFERENCE_HOST` to `localhost`.
- Install JS dependencies: `npm install`
- Bundle the JS source code: `npm run production`
- Run `php artisan serve` to start a simple HTTP server on port 8000. Alternatively, use Laravel’s `valet` (macOS) or Laravel Homestead, both of which come with nginx to serve the site.
- Done!
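The two `.env` steps above can also be scripted. The following is a sketch under assumptions: the helper names are hypothetical, it does not overwrite an existing `.env`, and it treats `.env` as simple `KEY=value` lines, which is how Laravel dotenv files are usually written. It replaces an existing `INFERENCE_HOST` line or appends one if `.env.example` does not define it.

```python
import shutil
from pathlib import Path


def set_env_value(env_path: Path, key: str, value: str) -> None:
    """Set KEY=value in a dotenv-style file, replacing an existing KEY line
    or appending one if the key is absent. Other lines are kept as-is."""
    lines = env_path.read_text().splitlines() if env_path.exists() else []
    prefix = key + "="
    replaced = False
    for i, line in enumerate(lines):
        if line.startswith(prefix):
            lines[i] = prefix + value
            replaced = True
    if not replaced:
        lines.append(prefix + value)
    env_path.write_text("\n".join(lines) + "\n")


def prepare_env(project_dir: Path, inference_host: str = "localhost") -> Path:
    """Copy .env.example to .env (only if .env does not exist yet) and
    point INFERENCE_HOST at the inference service."""
    env_path = project_dir / ".env"
    if not env_path.exists():
        shutil.copyfile(str(project_dir / ".env.example"), str(env_path))
    set_env_value(env_path, "INFERENCE_HOST", inference_host)
    return env_path
```

Run from the cloned `deepabis-cloud` directory, `prepare_env(Path("."))` performs both steps.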

Mobile version

Main repository: deepabis-mobile

- Requirements:
  - Android Studio
  - Android 8 or newer (for deployment on smartphones)
- Clone the mobile repository: `git clone https://github.com/deepabis/deepabis-mobile`
- Open the cloned directory in Android Studio.
- Click Build > Make Project. Gradle should synchronize the dependencies and build the project.
- Run the application. For this, you need to configure a virtual device using the AVD manager or, alternatively, plug in a real Android device.

Usage

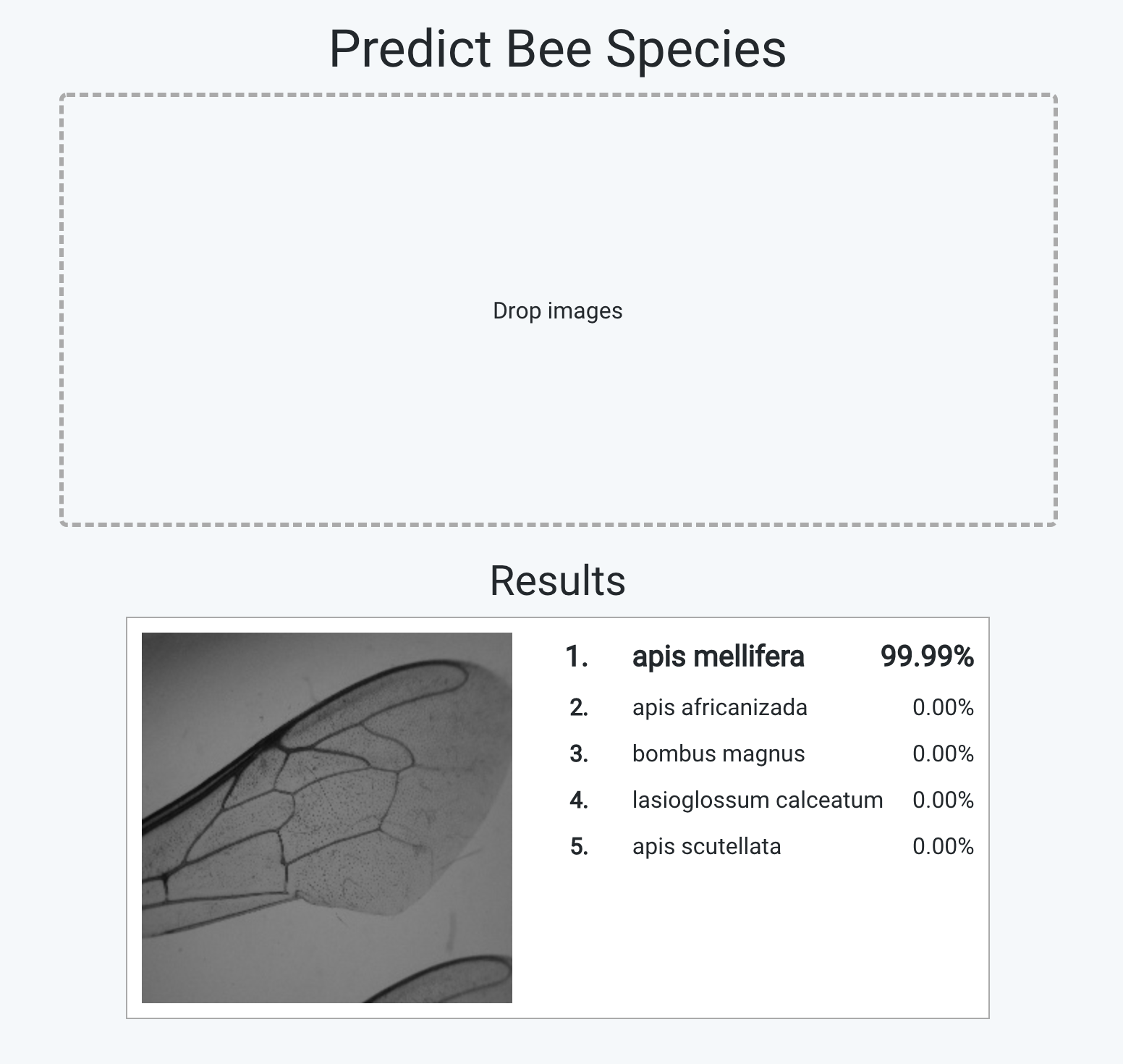

Web server

Drop any image (preferably a microscope image of a bee wing) into the “Drop images” box. If your installation was successful, the top 5 results will be displayed below after a short delay.
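This README does not say how the top 5 results are computed. A common approach for a CNN classifier, assumed here rather than taken from the DeepABIS source, is to apply a softmax to the network's output scores and sort the species by probability. The function names and species labels in this sketch are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probabilities: Sequence[float], labels: Sequence[str],
          k: int = 5) -> List[Tuple[str, float]]:
    """Return the k (label, probability) pairs with the highest probability, best first."""
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

Sorting all classes is simple and fast enough for a classifier with a modest number of species; for very large label sets, `heapq.nlargest` would avoid a full sort.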

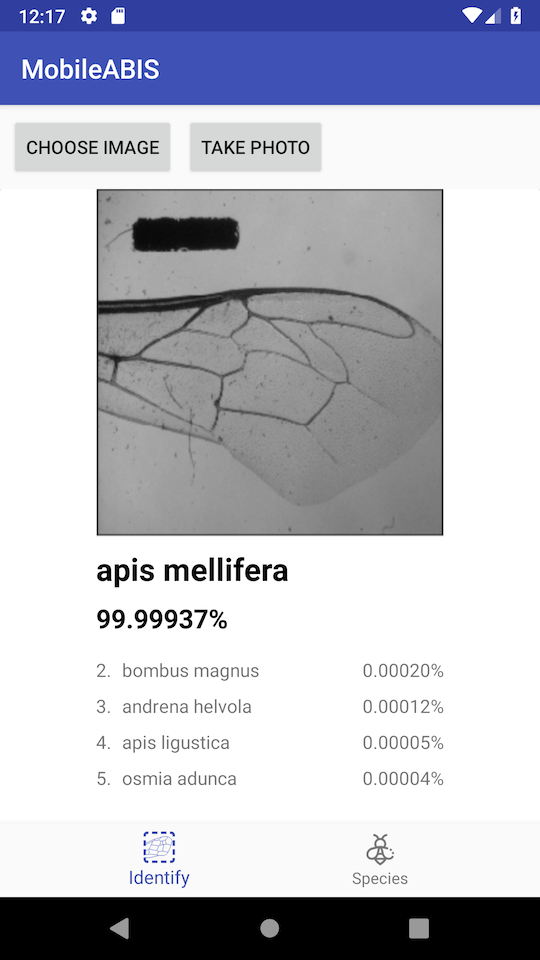

Mobile version

There are two views, the “Identify” and the “Species” view. In the “Identify” view, images can be classified. They can either be loaded by selecting an image from the device’s storage (by tapping “Choose Image”) or by directly taking a photo (tap the “Take Photo” button).

In the “Species” view, all species the classifier can recognize are listed.